What is Amazon Athena?

Amazon Athena is a service that enables a data analyst to perform interactive queries in the AWS cloud on data stored in AWS S3. Because Athena is a serverless query service, an analyst doesn’t need to manage any underlying compute infrastructure to use it.

Benefit

• Serverless service to perform analytic directly against s3 files

• Uses sql language to query the files

• Supports csv, json, orc, avro and parquet

• Charged per query and amount of data scanned (example: $5 per terabyte scanned by queries. )

Here are simple way to setup VPC flow logs from AWS S3 direct querying with Athena.

Prerequisite

- AWS Account & basic understanding of AWS Cloud technology

- Deploy EC2 instance with http service to public

- Create new Security group with http port 80 to everywhere and ssh port 22 to just your own ip address. No other ports to open

- Create S3 bucket Default setting without Public access

- SQL basic knowledge

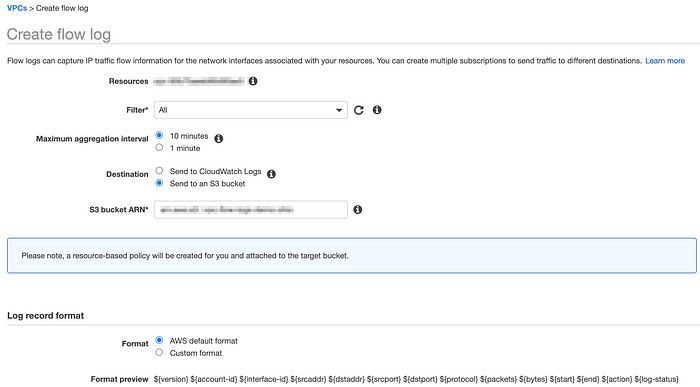

Configure / setup VPC Flow logs

- Select VPC which EC2 instance was deploy

- Select Flow logs tab and click Create

- At Filter area select ‘Accept/Reject/All’

- Select ‘10’ minutes at Maximum aggregation interval

- Select ‘Send to S3 bucket’ at Destination

- Select ‘AWS default format’ at Logs record format area

- Tags field optional/not require

- Click ‘Create’

- Wait for at least 1 hour and in the mean time let http web server up and running

Setup Athena Query

- Go to AWS Athena service

- Choose Database as Default

- Enter 1st Query (see below) replace correct S3 bucket url, AWS account number and region (e.g: us-east-2) under Location area. This will create tables

CREATE EXTERNAL TABLE IF NOT EXISTS vpc_flow_logs (

version int,

account string,

interfaceid string,

sourceaddress string,

destinationaddress string,

sourceport int,

destinationport int,

protocol int,

numpackets int,

numbytes bigint,

starttime int,

endtime int,

action string,

logstatus string

)

PARTITIONED BY (`date` date)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ‘ ‘

LOCATION ‘s3://your_log_bucket/prefix/AWSLogs/{account_id}/vpcflowlogs/{region_code}/’

TBLPROPERTIES (“skip.header.line.count”=”1");

- This second query will create/add partition. Below query replace YYYY-MM-dd with correct day. At location area S3 url, account id, regional and correct date. (Hint: replace correct date on both lines)

ALTER TABLE vpc_flow_logs

ADD PARTITION (`date`=’YYYY-MM-dd’)

location ‘s3://your_log_bucket/prefix/AWSLogs/{account_id}/vpcflowlogs/{region_code}/YYYY/MM/dd’;

- Third query 1st 100 lines of log (Replace with correct date)

SELECT *

FROM vpc_flow_logs

WHERE date = DATE(‘2020–08–15’)

LIMIT 100;

- This fourth query will pull 1st 100 Rejected and Protocol 6, you can replace anything from 2nd query result (Table)

SELECT day_of_week(date) AS

day,

date,

interfaceid,

sourceaddress,

action,

protocol

FROM vpc_flow_logs

WHERE action = ‘REJECT’ AND protocol = 6

LIMIT 100;

You can check those rejected IP Address at https://whatismyipaddress.com/ip-lookup see where are those people from.

Example of one Rejected IP

Finally, we are living in very dangerous world so need to protect our data. My piece of advise is setup web server in private subnet and use load balancer, which is one way easy way to protect your web server.

- Finally clean up, here how to Drop table command

DROP TABLE `vpc_flow_logs`;